A tool used to collect information from respondents.

Questionnaire

A graphic bar that gradually fills as the respondent progresses through the survey.

Progress Indicator

Level of measurement that is the most mathematically precise and has a true zero value.

Ratio

Question type that allows the respondent to select multiple responses.

Checklist

Reliable, not Valid

The "dress rehearsal" of survey administration and procedures.

Pilot Test

The population that the researcher is actually reasonably able to measure.

Accessible Population

When a researcher collects personal information about respondents but states this information will not be provided to anyone outside of the project.

Confidentiality

Response option that asks participants to select from a range of options such as Strongly Agree to Strongly Disagree.

Likert Scale

The response options do not conflict or overlap with each other in any way.

Mutually Exclusive

The measure appears to be a reasonable way to estimate the targeted construct.

Face Validity

When a researcher watches respondents take a survey and notes the actions of the respondent such as hesitation, confusion, and frustration.

Behavior Coding

The "list" of accessible elements of the population from which the researcher conducts the draw of the sample.

Sampling Frame

The degree to which a survey is stressful, complex, and perceived as time consuming.

Respondent Burden

Using strongly biased language and/or unclear messages that can manipulate or mislead a respondent into answering in a specific way.

Leading Question

Also known as bias, it occurs when the instrument is skewed toward a certain type of measurement. It affects all data collected by the skewed instrument equally.

Systematic Error

The degree of consistency with which two researchers interpret the same data.

Interrater Reliability
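
One common way to put a number on this consistency is simple percent agreement, optionally corrected for chance agreement with Cohen's kappa. The sketch below is illustrative only: the function names and the two coders' labels are made up for the example, and it assumes both researchers coded the same items in the same order.

```python
from collections import Counter

def percent_agreement(rater_a, rater_b):
    """Share of items on which the two raters gave the same code."""
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)

def cohens_kappa(rater_a, rater_b):
    """Agreement corrected for chance (undefined when chance agreement is 1)."""
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    # Chance agreement: probability both raters pick the same category at random,
    # given how often each rater used each category.
    p_chance = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    return (p_observed - p_chance) / (1 - p_chance)

# Hypothetical codes assigned by two researchers to the same six open-ended answers.
coder_1 = ["pos", "pos", "neg", "neg", "pos", "neg"]
coder_2 = ["pos", "neg", "neg", "neg", "pos", "neg"]

agreement = percent_agreement(coder_1, coder_2)
kappa = cohens_kappa(coder_1, coder_2)
```

Here the coders agree on five of six items, but kappa comes out lower than the raw agreement because some of that agreement would be expected by chance alone.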

The amount of time it takes to complete individual items in the survey as well as the full survey.

Response Latency

Combining two or more different types of survey methodologies into one complex implementation.

Multimode Surveys

Occurs when respondents are confronted with a series of questions similar enough that they might appear redundant, so respondents stop paying attention to differences and answer each similar item in the same way.

Habituation

Occurs when individuals who do not respond, refuse to answer, or are unable to answer specific survey items differ from respondents who are able or willing to answer.

Nonresponse Bias

When a response choice encompasses a range of numbers or categories defined in relation to the question.

Bracketed Categories

When the instrument is truly measuring the construct it was designed to measure, and not some other construct.

Construct Validity

When the researcher encourages pretest respondents to think out loud and voice their ongoing mental reactions, essentially narrating their thought processes while they take the survey.

Cognitive Interview

Surveys in which data on a wide variety of subjects are collected.

Omnibus Surveys

A survey item that applies only to some respondents, those who have provided a specific answer to a previous question.

Contingency Question

Occurs when one person translates the survey into another language, and a second person translates this version back into the original language.

Back Translation

When you expect a respondent to recall events that happened in the past or to count the number of times some event has occurred.

Retrospective Question

Consistency between two different versions of a measure that is probing the same construct - often with just slightly different wording.

Alternate Form Reliability

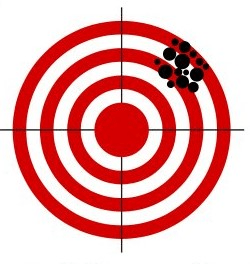

TWO things you could do to limit the bias of respondents choosing earlier response options.

Randomize the order of the response options, or reduce the number of response options.
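
As a rough illustration of the randomizing approach, the sketch below shuffles the response options separately for each respondent. The function name, the `respondent_id` seed, and the idea of pinning an "Other" option to the end are assumptions made for the example, not part of any particular survey platform.

```python
import random

def randomized_options(options, respondent_id, pinned_last=()):
    """Return the options in a per-respondent random order.

    Options listed in pinned_last (for example "Other") stay at the end.
    """
    # Seeding with the respondent ID keeps each respondent's order reproducible.
    rng = random.Random(respondent_id)
    shuffled = [o for o in options if o not in pinned_last]
    rng.shuffle(shuffled)
    return shuffled + [o for o in options if o in pinned_last]

# Hypothetical item: preferred contact method.
options = ["Email", "Phone", "Text message", "Mail", "Other"]
order = randomized_options(options, respondent_id=17, pinned_last=("Other",))
```

Because the order differs across respondents, no single option benefits from always appearing first, so any tendency to pick earlier options is spread across the whole list.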