Define: Independent Variable (IV), Dependent Variable (DV), and Control Variables

IV: manipulated or controlled by the researcher to observe its effect on another variable

DV: variable being measured in the experiment as a result of variations in the IV

Control Variables: potential confounding factors that are kept constant to ensure a fair test

What is random assignment? How does it help establish internal validity?

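Each participant has an equal chance of being placed in any condition (e.g., the experimental or control group), so group membership is decided by chance rather than by the researcher or the participant. This ensures that there are no systematic differences between the participants in each group at the start of the study, which rules out selection effects as an alternative explanation and strengthens internal validity.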
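A minimal sketch of random assignment, assuming ten hypothetical participant IDs and two conditions. The function name `randomly_assign` and the `seed` parameter are made up for illustration; the seed only makes the shuffle repeatable.

```python
import random

def randomly_assign(participants, conditions, seed=None):
    """Shuffle participants, then deal them out to conditions in turn."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for index, person in enumerate(shuffled):
        # Dealing in turn keeps group sizes equal (or within one of each other).
        groups[conditions[index % len(conditions)]].append(person)
    return groups

# Hypothetical participant IDs
participants = [f"P{i}" for i in range(1, 11)]
groups = randomly_assign(participants, ["treatment", "control"], seed=42)
print(groups)
```

Because chance alone decides who lands in which group, pre-existing traits should be spread evenly across conditions on average.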

What are the three criteria for establishing causation?

Covariance (correlation): demonstrated association between the IV and DV

Temporal Precedence: method was designed so that the causal variable clearly comes first in time, before the effect variable

Internal Validity: ability to eliminate alternative explanations for the association

What is the difference between a within-groups and between-groups research design? What are the pros and cons of each design?

Between-groups: participants only experience one level of the IV

- Pros: eliminates potential learning effects, shorter study duration

- Cons: requires more participants, individual differences between participants may impact results

Within-groups: participants experience all levels of the IV

- Pros: requires fewer participants, controls for individual differences, increased statistical power

- Cons: risk of order effects, not always feasible

What are degrees of freedom (df)? What does it represent?

Degrees of Freedom: the number of independent pieces of information used to calculate a statistic; that is, how many values in a data set are "free" to vary when calculating that statistic.

For t-tests, degrees of freedom depend on sample size: n - 1 for one-sample and paired samples t-tests (n = number of scores or pairs), and n1 + n2 - 2 for an independent samples t-test.
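As a quick illustration, the df rules for the three t-tests can be written as small helper functions. The function names are hypothetical, and the independent samples rule assumes the standard pooled-variance t-test.

```python
def df_one_sample(n):
    """One-sample t-test: one mean is estimated, so one value is not free."""
    return n - 1

def df_paired(n_pairs):
    """Paired samples t-test: based on the number of difference scores."""
    return n_pairs - 1

def df_independent(n1, n2):
    """Independent samples t-test (pooled variance): two means are estimated."""
    return n1 + n2 - 2

print(df_one_sample(10), df_paired(4), df_independent(5, 5))
```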

In an experiment, what is effect size and statistical significance?

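Effect Size: magnitude or strength of the relationship between two variables

Statistical Significance: a result is statistically significant when the probability of obtaining data at least this extreme if the null hypothesis were true (the p-value) falls below the chosen alpha level (typically .05). It indicates that the result would be unlikely under chance alone, not how large or important the effect is.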
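One common effect size for two independent groups is Cohen's d: the difference between means divided by the pooled standard deviation. The sketch below uses made-up aggression scores (not real data) and a hypothetical function name, and it covers effect size only, not the p-value.

```python
import math
import statistics

def cohens_d(group1, group2):
    """Cohen's d for two independent groups, using the pooled SD."""
    n1, n2 = len(group1), len(group2)
    var1 = statistics.variance(group1)  # sample variance (n - 1 denominator)
    var2 = statistics.variance(group2)
    pooled_sd = math.sqrt(((n1 - 1) * var1 + (n2 - 1) * var2) / (n1 + n2 - 2))
    return (statistics.mean(group1) - statistics.mean(group2)) / pooled_sd

# Made-up scores for illustration only
violent = [8, 7, 9, 6, 8]
nonviolent = [5, 6, 4, 6, 5]
d = cohens_d(violent, nonviolent)
print(round(d, 2))  # about 2.4 for these made-up scores
```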

What is the difference between a posttest-only and pretest/posttest design? When would researchers use each type of design?

Main difference lies in when the dependent variable is measured.

Posttest-only: DV measured only after the intervention

- Use When: it's important to avoid the "testing effect" caused by the pretest

Pretest/posttest: DV measured before and after the intervention

- Use When: the primary goal is to measure the change within a group after an intervention

How do large within-groups variances obscure between-groups differences?

When within-groups variance (noise) is large, it is harder to detect the signal (between-groups difference). That is, variability within each group can drown out the effect you are trying to detect.

Signal: The difference between group means (the actual effect of the treatment or intervention).

Noise: The variability within each group, which is unrelated to the intervention but occurs due to individual differences, measurement error, or other factors.
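To see the signal-to-noise idea in numbers, the sketch below computes an independent samples t statistic for two made-up data sets. Both have the same 2-point difference between group means, but one has far more within-group variability. The function name and all scores are assumptions for illustration.

```python
import math
import statistics

def independent_t(group1, group2):
    """Pooled-variance t statistic: mean difference (signal) / standard error (noise)."""
    n1, n2 = len(group1), len(group2)
    pooled_var = ((n1 - 1) * statistics.variance(group1)
                  + (n2 - 1) * statistics.variance(group2)) / (n1 + n2 - 2)
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (statistics.mean(group1) - statistics.mean(group2)) / standard_error

# Same means (10 vs. 8) in both scenarios; only the spread differs.
low_noise_a, low_noise_b = [9, 10, 11, 10, 10], [7, 8, 9, 8, 8]
high_noise_a, high_noise_b = [4, 16, 10, 7, 13], [2, 14, 8, 5, 11]

t_low = independent_t(low_noise_a, low_noise_b)
t_high = independent_t(high_noise_a, high_noise_b)
print(round(t_low, 2), round(t_high, 2))
```

The signal is identical in both scenarios, yet the t statistic shrinks sharply when the within-group variance grows.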

State null and alternative hypothesis:

A researcher wants to know whether violent video games influence aggression. She recruited 10 people, 5 played Grand Theft Auto for 20 minutes (Group 1), and the other 5 played Pac Man (Group 2). She then measured aggressive tendencies (higher scores represent more aggression).

H0 = Violent video games do not influence aggression

H0: μ1 – μ2 = 0 or μ1 = μ2

H1 = Violent video games influence aggression (non-directional)

H1: μ1 – μ2 ≠ 0 or μ1 ≠ μ2

How would you write a directional alternative hypothesis?

H1 = Violent video games increase aggression (directional)

H1: μ1 – μ2 > 0 or μ1 > μ2

Define the null and alternative hypothesis.

Null Hypothesis (H0): default or baseline assumption that there is no effect, no difference, or no relationship between variables

Alternative Hypothesis (Ha, also written H1): claim that there is an effect, difference, or relationship between variables

What are the four validities? Explain the questions you would have to ask to interrogate each of the four validities.

Internal: Does the study design rule out alternative explanations for the results?

External: How well do the results of a study generalize beyond the sample or setting of the study?

Construct: How well does a test or instrument measure the concept it is intended to measure?

Statistical: Are the conclusions accurately drawn from the statistical analyses?

(Threats include low statistical power, violation of statistical assumptions, and incorrect data analysis techniques.)

What are the different reasons why a study might result in null effects?

Not enough variance between groups (the IV didn't create a strong enough difference between groups)

Too much variance within groups (there's a lot of random noise or variability within each group, making it hard to see any differences between them)

A true null effect (the IV simply doesn't have an effect on the DV)

How do quasi-experiments differ from true experiments? What are the primary tradeoffs between experiments and quasi-experiments?

True experiments: participants are randomly assigned to different groups

Quasi-experiments: participants are not randomly assigned; instead, they are placed in groups based on pre-existing characteristics (e.g., age, gender, race)

Tradeoffs: Experiments offer stronger causal control but often at the expense of real-world applicability, while quasi-experiments are more realistic but less certain in isolating cause and effect. Experiments provide the most rigorous test of causality, but quasi-experiments are more practical and ethical when random assignment isn't feasible. Experiments reduce selection bias through random assignment, whereas quasi-experiments face higher bias risk and must rely on statistical adjustments.

Under what circumstances do you select: z-test, one-sample t-test, independent samples t-test, paired samples t-test

Z-test: comparing the mean of a single large sample to a known population mean and standard deviation

One-sample t-test: comparing the mean of a single sample to a known population mean when the population standard deviation is unknown

Independent samples t-test: comparing the means of two independent groups (two levels of one IV)

Paired samples t-test: comparing the means of two conditions or time points on the same participant/subject
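To make the paired samples case concrete, the sketch below computes a paired t statistic from difference scores. The before/after scores are made up and the function name is hypothetical; the key point is that the test works on each participant's difference, not on the two sets of raw scores.

```python
import math
import statistics

def paired_t(before, after):
    """Paired t: mean of the difference scores divided by their standard error."""
    differences = [a - b for a, b in zip(after, before)]
    n = len(differences)
    standard_error = statistics.stdev(differences) / math.sqrt(n)
    t = statistics.mean(differences) / standard_error
    return t, n - 1  # t statistic and degrees of freedom

# Made-up scores for four participants measured twice
before = [5, 6, 7, 6]
after = [7, 7, 9, 7]
t, df = paired_t(before, after)
print(round(t, 2), df)
```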

What is the assumption of homogeneity of variance? Why do we need to test for it in independent samples t-tests?

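Homogeneity of variance is the assumption that the variance of the dependent variable is approximately equal across the groups being compared. The standard independent samples t-test pools the two group variances into a single estimate, so unequal variances can distort the standard error and the resulting p-value.

Independent samples t-tests: check the assumption with Levene's Test

If the p-value for Levene's test is greater than .05, the variances are not significantly different from each other (the homogeneity of variance assumption is met). If it is less than .05, the assumption is violated, and the t-test results that do not assume equal variances (Welch's t-test) should be reported instead.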

What are comparison groups and double-blind studies? How do they help researchers avoid threats to internal validity?

Comparison Groups: group in an experiment that does not receive the treatment or intervention being studied. Used to determine whether the treatment caused a significant change

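Double-blind Studies: neither the participants nor the researchers conducting the study know who is in the experimental group and who is in the control group. This prevents participant expectations (demand characteristics, placebo effects) and researcher expectations (observer bias) from influencing the results.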

What are the eight different threats to internal validity?

History: occurs when an external, historical event happens for everyone in a study at the same time as the treatment

Maturation: occurs when in an experiment or quasi-experiment with a pretest and posttest, an observed change could have emerged more or less spontaneously over time (internal changes)

Regression: Regression to the mean occurs when a group scores extremely high or low at pretest; extreme scores are partly due to chance, so the next measurement tends to fall closer to the average even without any treatment

Attrition: Occurs when people drop out of a study over time

Testing Threats: A change in participants' scores that results from taking the same measure more than once (e.g., practice effects from getting used to or better at the measure, or fatigue and boredom)

Instrumentation: Threat to internal validity that occurs when a measuring instrument changes over time

Observer Bias: Bias that occurs when observer expectations influence the interpretation of participant behaviors or the outcome of the study

Demand characteristics: Cue that leads participants to guess a study’s hypothesis or goals; a threat to internal validity

Identify APA-formatting errors below (paired-sample t test):

Students scored significantly higher in psychology knowledge after completing their SMP (M = 7.5) than when they were in Intro (M = 6), t(3) = 5.2, p < .05, estimated d = 2.58 representing a large effect. The mean change in scores was 1.5 points lower in Intro, 95% CI [-2.42, -.58]. Ninety-five percent of the variability in psychology knowledge scores is explained by where they were in the major (intro or SMP level).

Errors:

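-Missing SD for each mean

-Statistical symbols (e.g., Cohen's d) not italicized

-"Ninety-five percent" should be 90% (r² = t² / (t² + df) = 27.04 / 30.04 = .90)

-Inconsistent decimal places (report values to two decimals: 7.50, 6.00, 5.20, 1.50)

-Missing leading zero in the confidence interval (-.58 should be -0.58, because the value can exceed 1)

Correct statement:

Students scored significantly higher in psychology knowledge after completing their SMP (*M* = 7.50, *SD* = 1.73) than when they were in Intro (*M* = 6.00, *SD* = 1.41), *t*(3) = 5.20, *p* < .05, estimated *d* = 2.58, representing a large effect. The mean change in scores was 1.50 points lower in Intro, 95% CI [-2.42, -0.58]. Approximately 90% of the variability in psychology knowledge scores is explained by where they were in the major (Intro or SMP level).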

Why do Z and T distributions differ? How does this impact hypothesis testing?

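Z-distribution: More precise estimates; used with larger samples and when the population standard deviation is known. It is the standard normal curve, so its shape is fixed.

T-distribution: Less precise estimates; used with smaller samples and when the population standard deviation is unknown and must be estimated from the sample. It has heavier tails than the z-distribution, and its shape depends on degrees of freedom, approaching the z-distribution as sample size grows.

Impact on hypothesis testing: with small samples, critical t values are larger than critical z values, so a larger test statistic is needed to reject the null hypothesis.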

Define: design confounds, selection effects, order effects (threats to internal validity)

Understand how experimenters can avoid each

Design confounds: occurs when a second variable happens to vary systematically along with the intended independent variable

- How To Avoid: carefully control all variables except the IV, ensure all aspects of the experiment are the same across conditions

Selection effects: kinds of participants at one level of the IV are systematically different from those at the other level (independent-groups designs only)

- How To Avoid: use random assignment, if random assignment isn't possible use matched groups

Order effects: threat to internal validity in which exposure to one condition changes participant responses to a later condition (within-groups designs only)

- How To Avoid: counterbalance the order of conditions, allow rest periods between conditions, randomize the order of tasks
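A minimal sketch of full counterbalancing, assuming a within-groups study with two hypothetical conditions ("A" and "B") and four participants. Every possible order is generated and participants are cycled through the orders, so each order is used equally often. The function name is made up for illustration.

```python
from itertools import permutations

def counterbalance(participants, conditions):
    """Assign each participant one of the possible condition orders, in rotation."""
    orders = list(permutations(conditions))
    return {person: orders[i % len(orders)] for i, person in enumerate(participants)}

# Hypothetical participants and conditions
schedule = counterbalance(["P1", "P2", "P3", "P4"], ["A", "B"])
for person, order in schedule.items():
    print(person, order)
```

With more than a few conditions the number of orders grows quickly, which is when researchers typically switch to partial counterbalancing.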

Define: measurement error, individual differences, situation noise

(causes of within-groups variance)

Understand how experimenters can avoid each

Measurement error: A human or instrument factor that can randomly inflate or deflate a person’s true score on the dependent variable (unreliable/imprecise tools)

Individual differences: Psychological characteristics that distinguish one person from another (e.g., intelligence, personality traits, and values)

Situation noise: Unrelated events or distractions in the external environment that create unsystematic variability within groups in an experiment

Define: Non-equivalent control groups design, interrupted time-series design, and nonequivalent groups interrupted time-series design

Non-equivalent control groups design: at least one treatment group, one comparison group, participants have not been randomly assigned to the two groups, at least one pretest and one posttest

Interrupted time-series design: quasi-experiment in which participants are measured repeatedly on a DV before, during, and after the “interruption” caused by some event

Nonequivalent groups interrupted time-series design: at least one treatment group, one comparison group, participants have not been randomly assigned to the two groups, participants are measured repeatedly on a DV before, during, and after the “interruption” caused by some event, and the presence or timing of the interrupting event differs among the groups

In a t-test, what does the numerator represent? What does the denominator represent? What factors affect each?

Numerator: represents difference between the means of the two groups being compared, affected by magnitude of the difference between group means

Denominator: represents standard error (SE) of the difference in means, accounts for the variability in the data due to chance, affected by sample size and by the variability (standard deviation) within each group

larger sample size → leads to smaller SE!
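The effect of sample size on the denominator can be sketched as follows, assuming two equal-sized groups that share the same standard deviation (a simplification). The mean difference, SD, and sample sizes are made-up values, and the function name is hypothetical.

```python
import math

def standard_error_of_difference(sd, n_per_group):
    """SE of the difference between two means, assuming equal SDs and group sizes."""
    return sd * math.sqrt(2 / n_per_group)

mean_difference = 2.0  # numerator: stays the same in every row
sd = 3.0               # within-group standard deviation

results = []
for n in (5, 20, 80):
    se = standard_error_of_difference(sd, n)
    results.append((n, se, mean_difference / se))  # (n, SE, t)

for n, se, t in results:
    print(n, round(se, 3), round(t, 2))
```

Under these assumptions, quadrupling the sample size halves the SE and therefore doubles t, even though the difference between means never changes.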

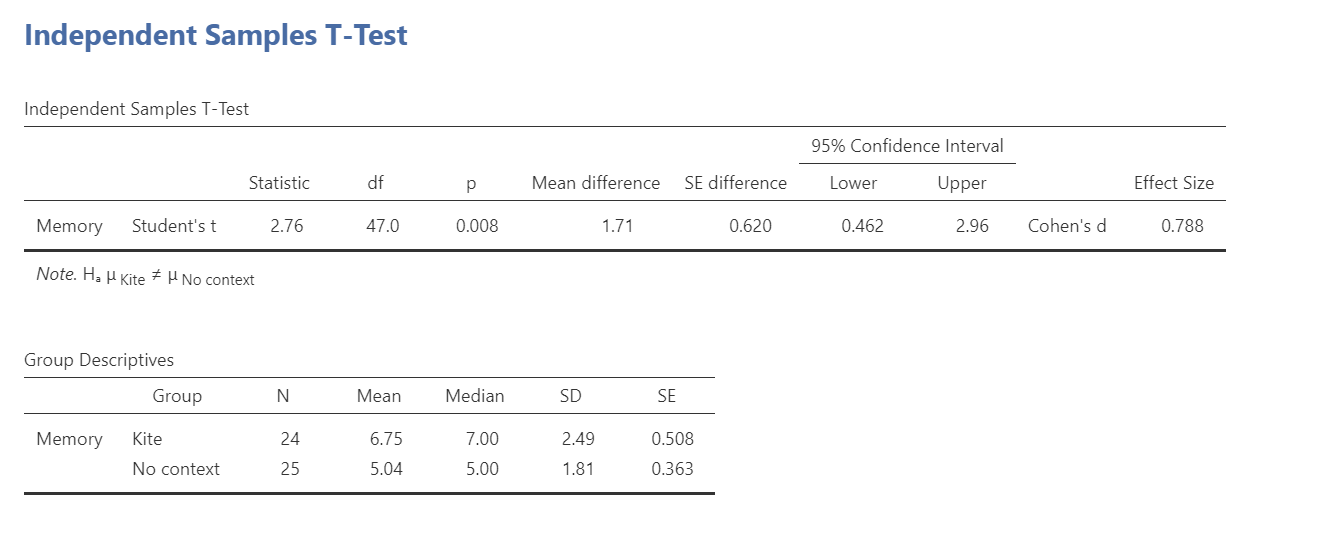

Calculate r-squared for the independent samples t-test below

R-squared calculation:

r² = t² / (t² + df)

r² = 2.76² / (2.76² + 47)

r² = 7.6176 / (7.6176 + 47)

r² = 7.6176 / 54.6176

r² = 0.1395 ≈ 0.14

About 14% of the variance in the dependent variable is explained by the independent variable
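The same calculation can be checked in a couple of lines. The function name `r_squared_from_t` is hypothetical; the inputs (t = 2.76, df = 47) are the values used in the calculation above.

```python
def r_squared_from_t(t, df):
    """Proportion of variance explained, computed from a t statistic and its df."""
    return t ** 2 / (t ** 2 + df)

r2 = r_squared_from_t(2.76, 47)
print(round(r2, 2))  # 0.14
```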